Every other day we see another striking headline highlighting the fast pace of development in artificial intelligence (AI), robotics and software. Robots acting as teaching assistants and artificial intelligence writing books and film scripts are just a few examples of such stunning news stories.

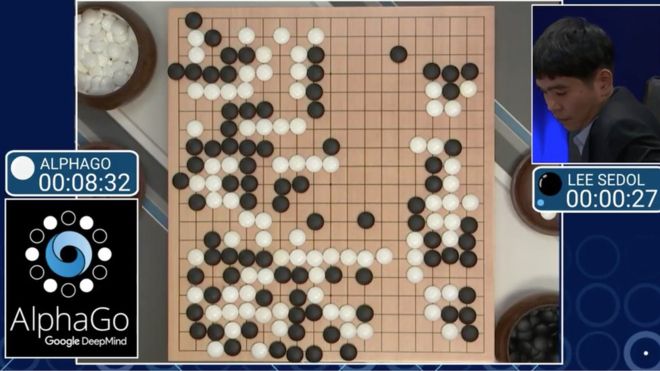

One recent prominent case was Google’s AlphaGo software, which beat Lee Sedol, one of the world’s strongest Go players, 4–1 in a five-game match in March 2016. Go is a hugely complex strategy board game originating from China, with more possible board positions than there are atoms in the observable universe. Sedol’s loss was not the first time, and most probably won’t be the last, that humankind has been defeated in a game at which it was thought computers would never match our abilities. In 1997, then world chess champion Garry Kasparov lost a match to IBM’s Deep Blue computer. Fourteen years later, in 2011, IBM was again making headlines when its Watson computer beat human champions at the quiz show Jeopardy!
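The comparison with atoms is easy to check with a little arithmetic. The Python sketch below counts board configurations rather than moves: each of the 361 points on a standard 19×19 board is empty, black or white, so 3^361 is a crude upper bound (it also counts illegal positions). The figure of 10^80 atoms in the observable universe is a commonly quoted order-of-magnitude estimate, used here as an assumption.

```python
import math

# A standard Go board has 19 x 19 intersections.
board_points = 19 * 19

# Each intersection is empty, black or white, so 3**361 is a crude upper
# bound on board configurations (it also counts illegal ones).
upper_bound = 3 ** board_points

# Assumption: a commonly quoted order-of-magnitude estimate for the
# number of atoms in the observable universe.
atoms_estimate = 10 ** 80

print(f"3^{board_points} is roughly 10^{math.log10(upper_bound):.0f}")
print("More configurations than atoms:", upper_bound > atoms_estimate)
```

The number of legal positions is smaller than this bound but, at roughly 10^170, still dwarfs the estimate for atoms, so the conclusion does not rest on the crude bound.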

Watson owes its impressive cognitive capabilities to developments in natural language processing, machine vision, computational statistics and, more recently, machine learning, where huge data sets are analysed to uncover patterns or detect anomalies.

When it is not beating mere mortals at Jeopardy!, Watson gives advice to physicians, helping with diagnosis, the evaluation of reports and the prescription of treatments. Watson taps into a huge collection of medical journals, textbooks and patient medical records to come up with the best advice. IBM has also announced that Watson will soon be serving customer-support call centres, a move several banks have already backed.

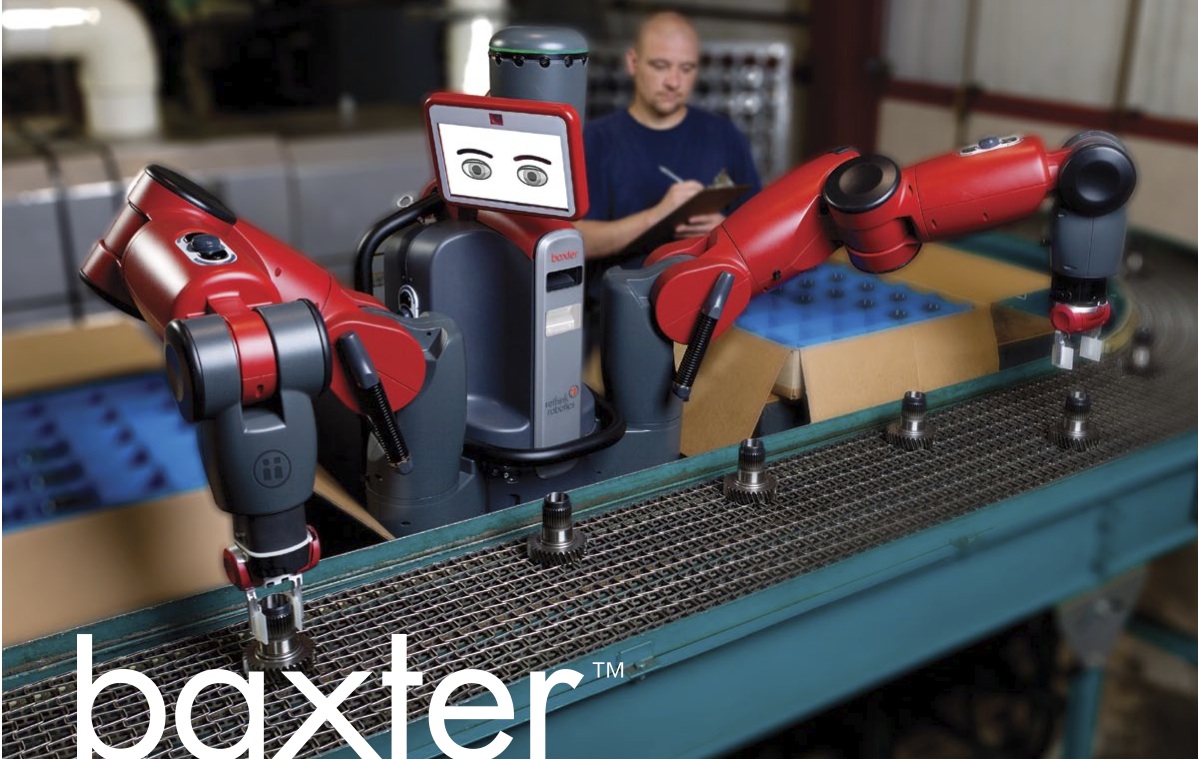

Disruptive innovation reaches beyond our hospitals and call centres. Meet Baxter, a $22,000 general-purpose robot that can help factory workers by doing repetitive tasks on the assembly line. It requires no programming to start working; instead, it learns from its human co-workers and memorises workflows. It can easily be upgraded with standard attachments to its arms, further expanding its bag of tricks. If Baxter is confused, its LCD screen displays a puzzled face to ask nearby workers for help. Rethink Robotics, the startup behind Baxter, claims robots won’t replace workers but will be great companions to people in factories and warehouses.

Amazon acquired robotics company Kiva Systems back in 2012, and by the end of 2015 it was using 30,000 Kiva robots across 13 warehouses. This investment has reportedly cut Amazon’s operating costs by a staggering 20%.

With innovation accelerating at such a pace, what will be the influence of AI and robotics on the future of the economy, the job market and humankind as a whole?

Demystifying AI

The dystopian view of ultra-intelligent, evil AI overlords is not a phenomenon that belongs solely to sci-fi films or literature. Many academics and business executives have voiced concerns about the issue. In 1965, the British mathematician Irving John Good, who had worked as a cryptologist alongside Alan Turing at Bletchley Park during WWII, put forward the following statement about the threat of ultra-intelligent machines:

“Let an ultra-intelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultra-intelligent machine could design even better machines; there would then unquestionably be an ‘intelligence explosion’, and the intelligence of man would be left far behind. Thus the first ultra-intelligent machine is the last invention that man need ever make, provided that the machine is docile enough to tell us how to keep it under control. It is curious that this point is made so seldom outside of science fiction. It is sometimes worthwhile to take science fiction seriously.”

Good is arguing here that developing an “intelligence” able to learn and improve itself iteratively could spell the end of human dominance over the planet. An intelligence with an unlimited capacity for self-improvement would be able to push far beyond the limits of human knowledge, ultimately superseding humankind.

Good’s doomsday scenario for humankind has featured in many sci-fi films and series over the years. Well-known examples include 2001: A Space Odyssey (1968), Star Wars (1977), Blade Runner (1982), Star Trek: The Next Generation (1987), The Matrix (1999), Her (2013) and Ex Machina (2015).

Recently though, Good’s intelligence explosion argument has been brought out of the realms of sci-fi, as some of the greatest minds in science and technology have openly voiced their concerns to the public.

In 2014, Stephen Hawking stated that “the development of full artificial intelligence could spell the end of the human race”. A similar, and perhaps even more alarming, statement came from Tesla’s CEO, Elon Musk, who said, “with artificial intelligence we are summoning the demon”. Bill Gates also sided with Musk and others, stating that future developments in artificial intelligence might produce an intelligence strong enough to be a genuine concern for humankind.

Should we fear the uprising of ultra-intelligent machines anytime soon?

In his 1993 essay “The Coming Technological Singularity”, Vernor Vinge, a computer scientist and science fiction writer, used the term “singularity” for the point at which machine intelligence matches and eventually surpasses human intelligence. He predicted that the singularity would happen sometime between 2005 and 2030. Building on Vinge’s essay, Ray Kurzweil, in his 2005 book “The Singularity Is Near: When Humans Transcend Biology”, placed the singularity around 2045. These bold predictions should be taken with a pinch of salt in light of both the current state of technology and the historical record.

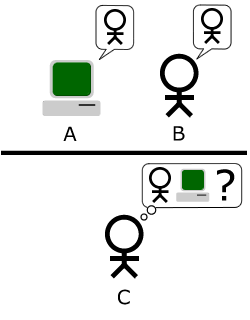

On the road to singularity, we still haven’t reached a significant milestone: an AI capable of passing the Turing test. Proposed by Alan Turing in 1950, the test checks whether a machine’s conversational behaviour can match a human’s. It works like this: a human and a computer are placed behind closed doors in separate rooms. Judges then exchange written messages with each room and try to determine which one contains the computer and which the human. If the judges cannot reliably tell the machine’s answers apart from the human’s, the AI passes the test. The annual Loebner Prize competition awards a medal to the most human-like program each year, but its gold medal, reserved for a machine that judges cannot distinguish from a human, has never been claimed. To date, no artificial intelligence has convincingly passed the test, although recent developments in natural language processing (NLP) and machine learning may be bringing us close. Passing the Turing test would be a key milestone on the road to singularity, but it is far from the end goal.
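Deciding whether judges “fail to identify” the machine is, in practice, a statistical question: a judge who guesses at random will still be right about half the time. The Python sketch below is illustrative only and uses a hypothetical pass criterion that is not taken from Turing’s paper or from the Loebner Prize rules: the machine passes if the judges’ rate of correct identifications is not significantly better than coin-flipping, using an exact one-sided binomial test. The function names and the significance level `alpha` are assumptions made for this example.

```python
from math import comb


def chance_p_value(correct: int, trials: int) -> float:
    """Exact one-sided binomial p-value: the probability of at least
    `correct` right identifications out of `trials` if every judge were
    guessing at random (success probability 0.5)."""
    return sum(comb(trials, k) for k in range(correct, trials + 1)) / 2 ** trials


def passes_imitation_game(verdicts: list[bool], alpha: float = 0.05) -> bool:
    """Hypothetical pass criterion, not an official rule.

    verdicts[i] is True when judge i correctly identified the machine.
    The machine 'passes' if the judges' accuracy is not significantly
    better than chance at significance level `alpha`."""
    correct = sum(verdicts)
    return chance_p_value(correct, len(verdicts)) >= alpha


# Hypothetical results from ten judges (True = machine correctly identified).
print(passes_imitation_game([True] * 9 + [False]))      # False: 9/10 is far better than chance
print(passes_imitation_game([True] * 6 + [False] * 4))  # True: 6/10 is consistent with guessing
```

Failing to reject chance is weaker than proving the machine and the human are indistinguishable; with more judges the same criterion becomes more demanding, which is one reason such pass criteria remain debated.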

Apart from the technical complications blurring the path to singularity, historical evidence also shows that AI is a field where over-promising and under-delivering has been the norm.

The field was making ambitious claims as early as the 1960s. The American mathematician and computer scientist John McCarthy, who coined the term “artificial intelligence”, promised his financial backers at the Pentagon that designing and building a machine able to match human intelligence would take only a decade. Quite clearly McCarthy’s grandiose vision never came to fruition, and his unfulfilled promise is not the only bold claim that never came to pass. A story published by The New York Times back in 1958 reported that the Navy was planning to build a $100,000 “thinking machine” within one year, based on psychologist Frank Rosenblatt’s research on neural networks. This was yet another ambitious artificial intelligence project that never lived up to its billing.

Road to Singularity

The road to singularity remains unclear, and there are some formidable hurdles along the way.

Singularitarians usually point to Moore’s law as the basis for eventually reaching artificial intelligence comparable with human cognitive skills. Moore’s law is an empirical observation put forward by Intel co-founder Gordon Moore in 1965 and revised in 1975: the number of transistors on an integrated circuit doubles roughly every two years, resulting in exponential growth of computational power. The fact that Moore’s law has held for the last four decades has been one of the main drivers of technological innovation. There is one catch, however: we are approaching the physical limits of manufacturing. As transistors shrink towards the size of atoms, the number that can fit into a fixed area will hit a limit. The stalling of Moore’s law suggests there is no shortcut to singularity. We could instead place our bets on quantum computing, which exploits quantum-mechanical properties of matter, such as superposition and entanglement, to perform computation. However, the technology is far from mature, with current prototypes running on only a handful of qubits (quantum bits, the quantum counterpart of classical bits). It is fair to say that the time when we may reap the rewards of this technology is still decades away.
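The exponential growth behind Moore’s law can be made concrete with a short calculation. The Python sketch below assumes an idealised, perfectly regular doubling every two years, which real chips only approximate; the function names and parameters are illustrative, not taken from any source.

```python
def growth_factor(years: float, doubling_period: float = 2.0) -> float:
    """Growth in transistor count after `years`, assuming an idealised
    doubling every `doubling_period` years."""
    return 2 ** (years / doubling_period)


def projected_transistors(initial_count: float, years: float,
                          doubling_period: float = 2.0) -> float:
    """Projected transistor count, starting from `initial_count`."""
    return initial_count * growth_factor(years, doubling_period)


# Four decades of doubling every two years is 20 doublings.
print(growth_factor(40))                 # 1048576.0, roughly a million-fold increase
print(projected_transistors(1_000, 10))  # 32000.0
```

The same arithmetic shows why a stall matters: if each doubling took three years instead of two, four decades would yield only about a 10,000-fold increase rather than a million-fold one.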

Another obstacle is our limited understanding of how the human brain works. The basic mechanisms of biological intelligence are not fully understood, so there is no complete model of human cognitive processes for computers to simulate. Unsurprisingly, many artificial intelligence and neuroscience researchers are skeptical about the idea of reaching singularity.

Nevertheless, even though singularity seems like a distant dream for now, a public debate involving actors ranging from academics and business executives to philosophers helps ensure that the necessary care is taken to protect our species from potential harm.

Last year Elon Musk donated $10m to the Future of Life Institute, where he also serves on the scientific advisory board. The donation will fund research aimed at keeping AI beneficial to humanity. In 2016, Google’s DeepMind division teamed up with researchers from the University of Oxford to find ways to switch off AI agents repeatedly and safely. The team published a peer-reviewed paper introducing a framework that allows human operators to interrupt AI agents without the agents learning to prevent or resist those interruptions: in effect, a big red button for halting an AI.

Closing thoughts

It is crucial that AI agents are designed to be human-friendly, putting the best interests of humankind and the planet above everything else. Development in the field should be built on two pillars: integrity and transparency. Corporations should be transparent about the scope of their AI projects, measuring the impact of such developments and taking steps to adequately manage the systemic risks involved. Academics, subject experts and policy makers should contribute to the development of the field with integrity, acting as the moral compass that guides the interests of corporations. Only then can we pave the way to ethical AI that benefits humanity as a whole.

Note: This piece was published in the Italian magazine SmartWeek whilst I was studying at Bocconi University.